BREAKING: Meta AI announces LLaMA: A foundational, 65-billion-parameter large language model

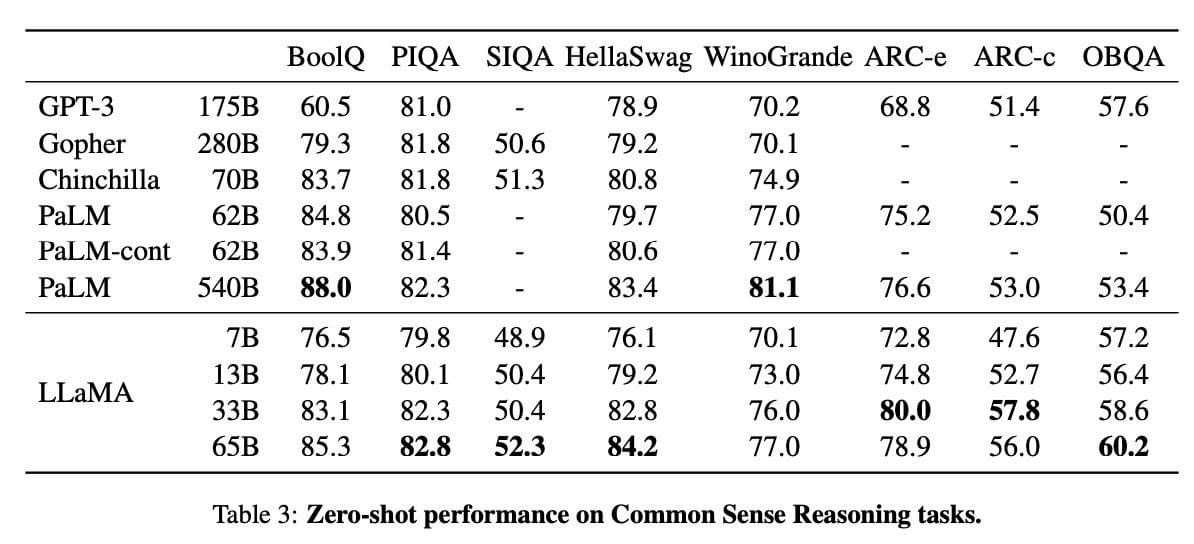

LLaMA is a collection of foundation models ranging from 7B to 65B parameters. LLaMA-13B outperforms OPT and GPT-3 175B on most benchmarks, and LLaMA-65B is competitive with Chinchilla 70B and PaLM 540B.

The weights for all models are open and available at https://research.facebook.com/....publications/llama-o
